Overall architecture of NNToolChain

NNToolchain, part of SOPHONSDK, is a deep learning toolchain that Sophon customized for its independently developed AI chips. It covers the capabilities required at the neural network (NN) inference stage, such as model optimization and efficient execution, and provides an easy-to-use, efficient full-stack solution for developing and deploying deep learning applications.

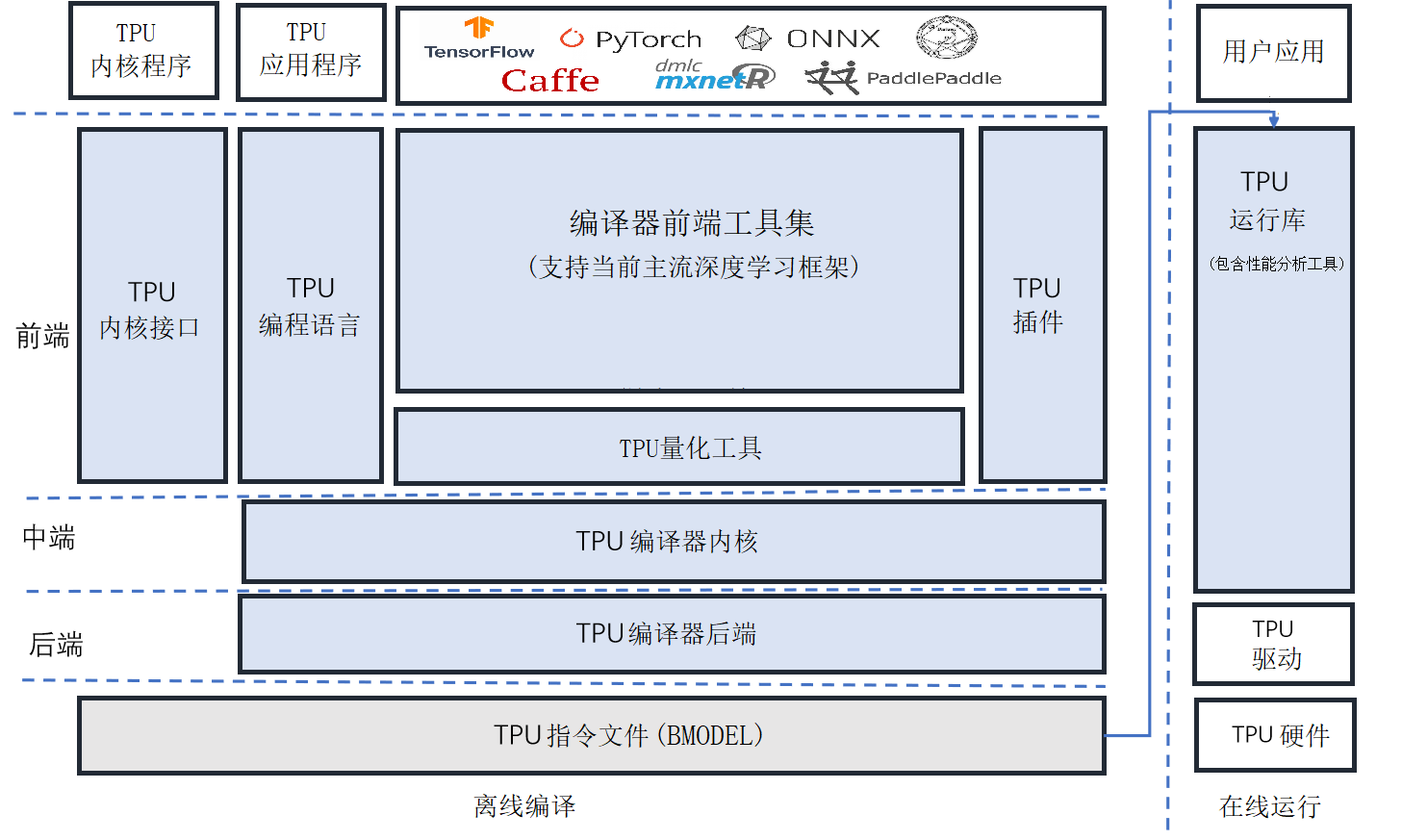

See the figure below for the overall architecture of NNToolchain, which consists of two parts: Compiler and Runtime (left and right in the figure). Compiler compiles and optimizes deep neural network models from various mainstream frameworks, such as Caffe and TensorFlow. Toward the hardware, Runtime hides the low-level implementation details and drives the TPU chips; toward applications, it provides a unified programming interface. It supports not only neural network inference but also acceleration of DNN and CV algorithms.
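The layering described above can be illustrated with a short Python sketch. Runtime hides device details from the layers above it and exposes one interface to applications. This is a minimal, hypothetical illustration. `Backend`, `SimulatedTPU`, and `Runtime` are invented names, not part of the SOPHONSDK API, and the "TPU" here is a plain Python stand-in whose "device memory" is a dictionary.

```python
from abc import ABC, abstractmethod
from typing import Dict, Iterable, List


class Backend(ABC):
    """Hypothetical low-level device interface, hidden from applications."""

    @abstractmethod
    def upload(self, data: List[float]) -> int:
        """Copy host data into device memory and return a buffer handle."""

    @abstractmethod
    def relu(self, handle: int) -> int:
        """Run a ReLU kernel on a device buffer and return a new handle."""

    @abstractmethod
    def download(self, handle: int) -> List[float]:
        """Copy a device buffer back to host memory."""


class SimulatedTPU(Backend):
    """Stand-in accelerator: 'device memory' is a dict of handle -> buffer."""

    def __init__(self) -> None:
        self._mem: Dict[int, List[float]] = {}
        self._next = 0

    def _store(self, data: Iterable[float]) -> int:
        handle = self._next
        self._mem[handle] = list(data)
        self._next += 1
        return handle

    def upload(self, data: List[float]) -> int:
        return self._store(data)

    def relu(self, handle: int) -> int:
        return self._store(max(0.0, x) for x in self._mem[handle])

    def download(self, handle: int) -> List[float]:
        return list(self._mem[handle])


class Runtime:
    """Application-facing interface: callers never see handles or device memory."""

    def __init__(self, backend: Backend) -> None:
        self._backend = backend

    def relu(self, data: List[float]) -> List[float]:
        handle = self._backend.upload(data)   # host -> device
        result = self._backend.relu(handle)   # run on device
        return self._backend.download(result) # device -> host


rt = Runtime(SimulatedTPU())
print(rt.relu([-1.0, 2.0, -3.0, 4.0]))  # [0.0, 2.0, 0.0, 4.0]
```

The application calls only `Runtime.relu`. Swapping in another `Backend` implementation would not change application code, which is the point of the downward/upward split.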

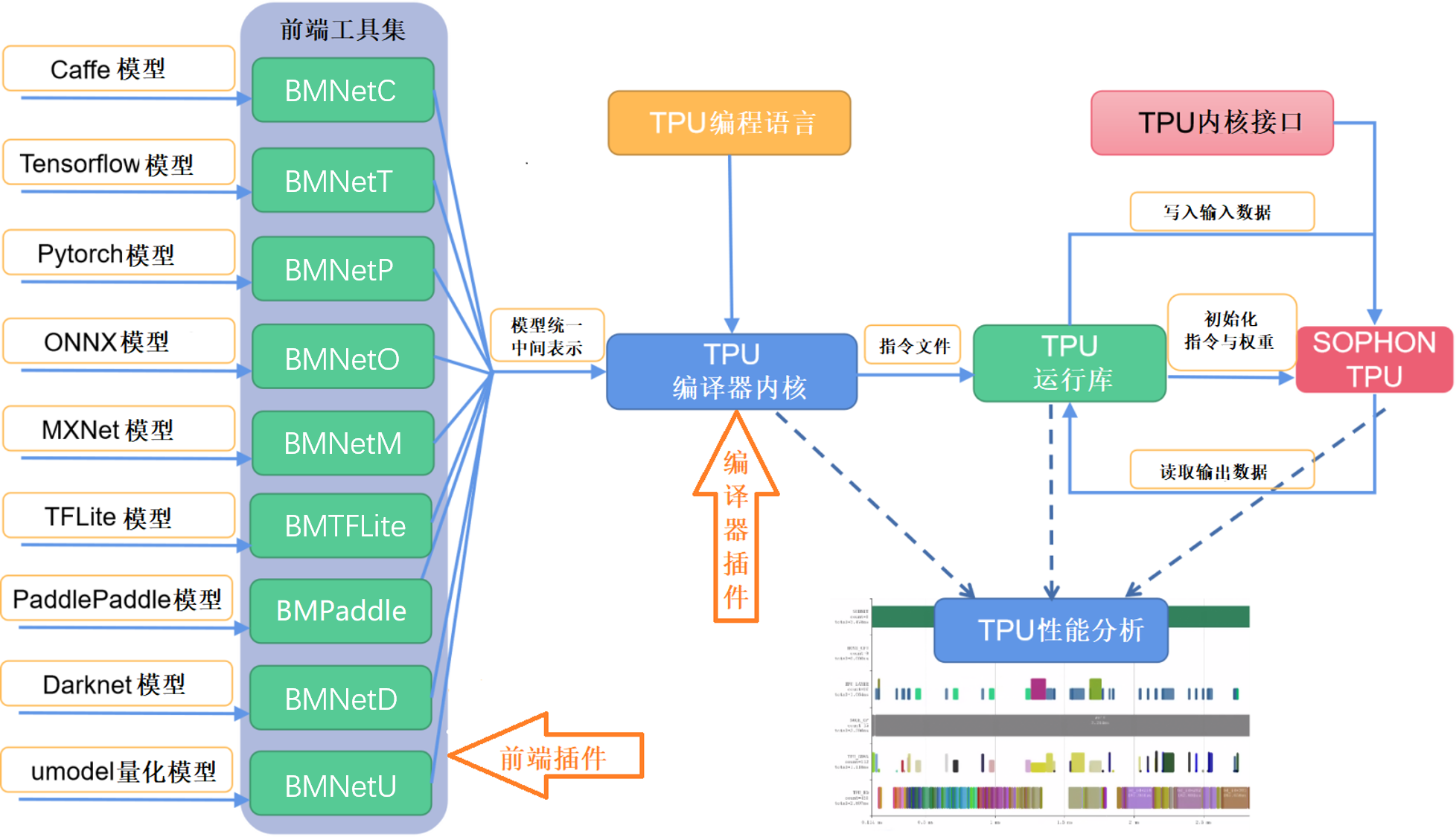

See the figure below for the working process of NNToolChain. First, Compiler converts a model from a mainstream framework into bmodel, the model format that the TPU can recognize. Runtime then loads the bmodel, writes input data to the TPU for neural network inference, and reads back the TPU's results. In addition, users can build custom upper-layer operators and networks in the TPU programming language, and the TPU kernel interface allows programming directly on the TPU device. Models can also be profiled with the TPU performance analysis tools, and users can extend the front end and add custom optimization passes through the corresponding plugin mechanism.
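The two-stage flow described above can be mimicked in a toy Python sketch: compile once into a serialized artifact, then load it and run inference. All names here (`compile_model`, `ToyRuntime`, the `(op, parameter)` layer format) are hypothetical. The JSON blob only stands in for a bmodel and is not the real bmodel format. Fusing consecutive `scale` layers is an invented example of an optimization pass, not one of NNToolchain's actual passes.

```python
import json
from typing import List, Tuple

# Hypothetical framework-level model: an ordered list of (op, parameter) pairs.
Layer = Tuple[str, float]
SUPPORTED_OPS = {"scale", "bias", "relu"}


def compile_model(layers: List[Layer]) -> bytes:
    """Stand-in for the Compiler: reject unsupported ops, fuse consecutive
    'scale' layers (a toy optimization pass), and serialize the result to
    bytes that play the role of a bmodel."""
    optimized: List[list] = []
    for op, param in layers:
        if op not in SUPPORTED_OPS:
            raise ValueError(f"unsupported op: {op}")
        if op == "scale" and optimized and optimized[-1][0] == "scale":
            optimized[-1][1] *= param  # x * a * b == x * (a * b)
        else:
            optimized.append([op, param])
    return json.dumps(optimized).encode("utf-8")


class ToyRuntime:
    """Stand-in for the Runtime: load the compiled blob, write inputs,
    execute the program, and read the results back."""

    def __init__(self, blob: bytes) -> None:
        self.program = json.loads(blob.decode("utf-8"))

    def infer(self, inputs: List[float]) -> List[float]:
        buffer = list(inputs)  # "write input data to the device"
        for op, param in self.program:
            if op == "scale":
                buffer = [x * param for x in buffer]
            elif op == "bias":
                buffer = [x + param for x in buffer]
            elif op == "relu":
                buffer = [max(0.0, x) for x in buffer]
        return buffer  # "read results back to the host"


model = [("scale", 2.0), ("scale", 3.0), ("bias", -5.0), ("relu", 0.0)]
blob = compile_model(model)
print(json.loads(blob))                         # [['scale', 6.0], ['bias', -5.0], ['relu', 0.0]]
print(ToyRuntime(blob).infer([0.5, 1.0, 2.0]))  # [0.0, 1.0, 7.0]
```

Separating the stages this way means the (possibly expensive) compilation and optimization happen once offline. The runtime only needs to load and execute the prepared artifact.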