5. General YOLOv7 Model Deployment

5.1. Introduction

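This document describes how to deploy a YOLOv7-architecture model on a cv181x development board. The main steps are:

- Convert the PyTorch version of the YOLOv7 model to an ONNX model

- Convert the ONNX model to the cvimodel format

- Write the calling code that runs inference and retrieves the results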

5.2. Converting the PyTorch (.pt) Model to ONNX

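Download the code of the official [yolov7](https://github.com/WongKinYiu/yolov7) repository:

    git clone https://github.com/WongKinYiu/yolov7.git

In the cloned directory, create a folder named weights, then copy the .pt model that is to be exported to ONNX into yolov7/weights:

    cd yolov7 && mkdir weights

    cp path/to/yolov7-tiny.pt ./weights/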

Next, copy yolo_export/yolov7_export.py into the yolov7 directory.

Then export the YOLOv7 model in the TDL_SDK form with the following command:

    python yolov7_export.py --weights ./weights/yolov7-tiny.pt

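Tip

If the input is a 1080p video stream, it is recommended to change the model input size to 384x640. This reduces redundant computation and speeds up inference, as in the following command:

    python yolov7_export.py --weights ./weights/yolov7-tiny.pt --img-size 384 640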
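To illustrate where 384x640 comes from, the sketch below scales a 1920x1080 frame so that its long side is 640 pixels, then rounds each side up to a multiple of 32 (the largest stride configured in section 5.4). The helper name `letterbox_shape` is hypothetical, and this is only an illustration of the arithmetic, not the logic inside yolov7_export.py.

```python
import math

def letterbox_shape(src_h, src_w, long_side=640, stride=32):
    """Return (height, width) after an aspect-preserving resize and stride padding."""
    longest = max(src_h, src_w)
    new_h = src_h * long_side / longest
    new_w = src_w * long_side / longest
    # Round each side up to the next multiple of the stride.
    pad_h = math.ceil(new_h / stride) * stride
    pad_w = math.ceil(new_w / stride) * stride
    return pad_h, pad_w

h, w = letterbox_shape(1080, 1920)
print(h, w)  # 384 640

# Fraction of input pixels saved compared with a square 640x640 input.
saving = 1 - (h * w) / (640 * 640)
print(f"pixels saved vs 640x640: {saving:.0%}")  # 40%
```

Under these assumptions a 16:9 frame fills a 384x640 input almost completely, whereas a 640x640 input would spend roughly 40% of its pixels on padding.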

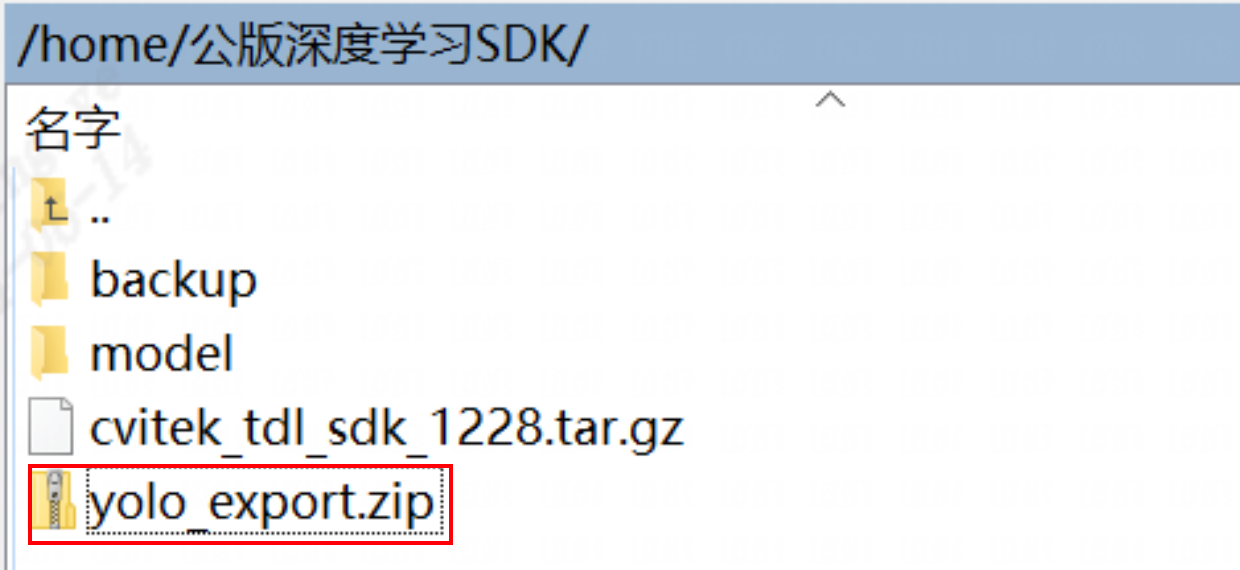

yolo_export中的脚本可以通过SFTP获取:下载站台:sftp://218.17.249.213 帐号:cvitek_mlir_2023 密码:7&2Wd%cu5k

通过SFTP找到下图对应的文件夹:

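5.3. Converting the ONNX Model to cvimodel

For the cvimodel conversion procedure, refer to the "Converting the ONNX Model to cvimodel" part of the yolo-v5 porting chapter.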

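Caution

The preprocessing parameters of the official YOLOv7 model, namely mean and scale, are the same as those of YOLOv5, so the command used to convert YOLOv5 to cvimodel can be reused.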
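As a quick check of what these parameters mean, the sketch below uses the values that the `init_param` code in section 5.4 assigns to every channel (factor 0.003922, mean 0.0) and shows that they map 8-bit pixel values to roughly the range 0 to 1. The form `x * factor - mean` is an assumption made for illustration; with a mean of 0 the order of the two operations does not change the result.

```python
FACTOR = 0.003922  # per-channel scale from section 5.4, approximately 1/255
MEAN = 0.0         # per-channel mean from section 5.4

def normalize(pixel):
    """Map an 8-bit pixel value to the model input range (assumed form: x * factor - mean)."""
    return pixel * FACTOR - MEAN

print(normalize(0), normalize(255))
print(abs(FACTOR - 1 / 255))  # difference between the rounded constant and 1/255
```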

5.4. TDL_SDK Interface Description

Detection and decoding for YOLOv7 are largely the same as for YOLOv5. The main difference is the set of anchors.

Caution

Be sure to change the anchors to the YOLOv7 anchors.

- anchors:

[12,16, 19,36, 40,28] # P3/8

[36,75, 76,55, 72,146] # P4/16

[142,110, 192,243, 459,401] # P5/32
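The sketch below shows how these anchors enter box decoding. It groups the flat 18-value list (the same order as the `p_anchors` array in the C++ code of this section) into three heads of three (width, height) pairs, then decodes one prediction with the formula used by the upstream PyTorch detection head of YOLOv5 and YOLOv7. That TDL_SDK decodes in exactly this way is an assumption, and the helper names `anchors_per_head` and `decode_box` are hypothetical.

```python
import math

# Flat anchor list, ordered P3/8, P4/16, P5/32.
ANCHORS = [12, 16, 19, 36, 40, 28, 36, 75, 76, 55, 72, 146, 142, 110, 192, 243, 459, 401]
STRIDES = [8, 16, 32]

def anchors_per_head(flat, num_heads=3):
    """Group the flat list into num_heads lists of (width, height) pairs."""
    pairs = list(zip(flat[0::2], flat[1::2]))
    per_head = len(pairs) // num_heads
    return [pairs[i * per_head:(i + 1) * per_head] for i in range(num_heads)]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def decode_box(raw, grid_x, grid_y, stride, anchor):
    """Decode one raw (tx, ty, tw, th) prediction into (cx, cy, w, h) in input pixels."""
    tx, ty, tw, th = (sigmoid(v) for v in raw)
    cx = (tx * 2.0 - 0.5 + grid_x) * stride
    cy = (ty * 2.0 - 0.5 + grid_y) * stride
    w = (tw * 2.0) ** 2 * anchor[0]
    h = (th * 2.0) ** 2 * anchor[1]
    return cx, cy, w, h

heads = anchors_per_head(ANCHORS)
# Raw outputs of 0 give sigmoid 0.5: the box sits at the cell center with the anchor's size.
box = decode_box((0, 0, 0, 0), grid_x=10, grid_y=5, stride=STRIDES[0], anchor=heads[0][0])
print(box)  # (84.0, 44.0, 12.0, 16.0)
```

Because the anchor multiplies the decoded width and height directly, leaving the YOLOv5 anchors in place would distort every predicted box size even if the rest of the pipeline were correct.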

The preprocessing and algorithm parameters are set as shown in the following code:

    // Set the preprocessing and algorithm parameters for YOLOv7 detection.
    // With the official model, these parameters do not need to be changed.
    CVI_S32 init_param(const cvitdl_handle_t tdl_handle) {
      // Set up preprocessing: per-channel scale (about 1/255) and mean.
      YoloPreParam preprocess_cfg =
          CVI_TDL_Get_YOLO_Preparam(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7);

      for (int i = 0; i < 3; i++) {
        printf("assign val %d \n", i);
        preprocess_cfg.factor[i] = 0.003922;
        preprocess_cfg.mean[i] = 0.0;
      }
      preprocess_cfg.format = PIXEL_FORMAT_RGB_888_PLANAR;

      printf("setup yolov7 param \n");
      CVI_S32 ret =
          CVI_TDL_Set_YOLO_Preparam(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7, preprocess_cfg);
      if (ret != CVI_SUCCESS) {
        printf("Can not set Yolov7 preprocess parameters %#x\n", ret);
        return ret;
      }

      // Set up the YOLO algorithm parameters: anchors, strides, and class count.
      YoloAlgParam yolov7_param = CVI_TDL_Get_YOLO_Algparam(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7);

      // Anchors are (width, height) pairs, three per head, ordered P3/8, P4/16, P5/32.
      uint32_t *anchors = new uint32_t[18];
      uint32_t p_anchors[18] = {12, 16, 19,  36,  40,  28,  36,  75,  76,
                                55, 72, 146, 142, 110, 192, 243, 459, 401};
      memcpy(anchors, p_anchors, sizeof(p_anchors));
      yolov7_param.anchors = anchors;

      uint32_t *strides = new uint32_t[3];
      uint32_t p_strides[3] = {8, 16, 32};
      memcpy(strides, p_strides, sizeof(p_strides));
      yolov7_param.strides = strides;

      yolov7_param.cls = 80;

      printf("setup yolov7 algorithm param \n");
      ret = CVI_TDL_Set_YOLO_Algparam(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7, yolov7_param);
      if (ret != CVI_SUCCESS) {
        printf("Can not set Yolov7 algorithm parameters %#x\n", ret);
        return ret;
      }

      // Set the confidence threshold and the NMS threshold.
      CVI_TDL_SetModelThreshold(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7, 0.5);
      CVI_TDL_SetModelNmsThreshold(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7, 0.5);

      printf("yolov7 algorithm parameters setup success!\n");
      return ret;
    }
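The two thresholds set at the end of `init_param` control which detections survive post-processing. As a rough illustration of their effect, the following sketch first drops boxes whose score is below the confidence threshold and then applies a greedy non-maximum suppression with the NMS threshold. This is a generic textbook version that ignores class labels for brevity; it is not taken from the TDL_SDK implementation, and the names `iou` and `filter_and_nms` are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2, ...) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def filter_and_nms(dets, score_thr=0.5, nms_thr=0.5):
    """dets: list of (x1, y1, x2, y2, score). Returns kept detections, best score first."""
    candidates = sorted((d for d in dets if d[4] >= score_thr),
                        key=lambda d: d[4], reverse=True)
    kept = []
    for det in candidates:
        # Keep a box only if it does not overlap too much with any better box.
        if all(iou(det, k) <= nms_thr for k in kept):
            kept.append(det)
    return kept

dets = [
    (10, 10, 110, 110, 0.90),
    (12, 12, 112, 112, 0.80),    # overlaps the first box heavily, so it is suppressed
    (200, 200, 260, 260, 0.70),
    (300, 300, 320, 320, 0.30),  # below the score threshold
]
kept = filter_and_nms(dets)
print(kept)
```

Raising the confidence threshold trades recall for fewer false positives, while lowering the NMS threshold removes more overlapping boxes.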

The inference interface is used as follows:

    // Load the cvimodel.
    ret = CVI_TDL_OpenModel(tdl_handle, CVI_TDL_SUPPORTED_MODEL_YOLOV7, model_path.c_str());
    if (ret != CVI_SUCCESS) {
      printf("open model failed %#x!\n", ret);
      return ret;
    }
    std::cout << "model opened:" << model_path << std::endl;

    // Read the test image into a video frame.
    VIDEO_FRAME_INFO_S fdFrame;
    ret = CVI_TDL_ReadImage(str_src_dir.c_str(), &fdFrame, PIXEL_FORMAT_RGB_888);
    if (ret != CVI_SUCCESS) {
      std::cout << "Convert out video frame failed with :" << ret << ".file:" << str_src_dir
                << std::endl;
      // Do not run inference on a frame that failed to load.
      return ret;
    }
    std::cout << "CVI_TDL_ReadImage done!\n";

    // Run detection and print each box as: x1 y1 x2 y2 score class.
    cvtdl_object_t obj_meta = {0};
    CVI_TDL_Yolov7(tdl_handle, &fdFrame, &obj_meta);

    for (uint32_t i = 0; i < obj_meta.size; i++) {
      printf("detect res: %f %f %f %f %f %d\n", obj_meta.info[i].bbox.x1, obj_meta.info[i].bbox.y1,
             obj_meta.info[i].bbox.x2, obj_meta.info[i].bbox.y2, obj_meta.info[i].bbox.score,
             obj_meta.info[i].classes);
    }

5.5. Test Results

The metrics of each version of the yolov7-tiny model were measured on the COCO2017 dataset, with the thresholds set to:

conf_threshold: 0.001

nms_threshold: 0.65

The input resolution is 640 x 640 in all cases.

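Performance of the yolov7-tiny model with the official export method:

| Platform | Inference time (ms) | Bandwidth (MB) | ION (MB) | mAP 0.5 | mAP 0.5-0.95 |
|---|---|---|---|---|---|
| pytorch | N/A | N/A | N/A | 56.7 | 38.7 |
| cv180x | ION allocation failed | ION allocation failed | 38.97 | Quantization failed | Quantization failed |
| cv181x | 75.4 | 85.31 | 17.54 | Quantization failed | Quantization failed |
| cv182x | 56.6 | 85.31 | 17.54 | Quantization failed | Quantization failed |
| cv183x | 21.85 | 71.46 | 16.15 | Quantization failed | Quantization failed |
| cv186x | 7.91 | 137.72 | 23.87 | Quantization failed | Quantization failed |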

Performance of the yolov7-tiny model with the TDL_SDK export method:

| Platform | Inference time (ms) | Bandwidth (MB) | ION (MB) | mAP 0.5 | mAP 0.5-0.95 |
|---|---|---|---|---|---|
| onnx | N/A | N/A | N/A | 53.7094 | 36.438 |
| cv180x | ION allocation failed | ION allocation failed | 36.81 | ION allocation failed | ION allocation failed |
| cv181x | 70.41 | 70.66 | 15.43 | 53.3681 | 32.6277 |
| cv182x | 52.01 | 70.66 | 15.43 | 53.3681 | 32.6277 |
| cv183x | 18.95 | 55.86 | 14.05 | 53.3681 | 32.6277 |
| cv186x | 6.54 | 99.41 | 17.98 | 53.44 | 33.08 |
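To make the comparison between the two export methods easier to read, the following sketch computes the relative reduction in inference time per platform. It uses only the inference times and mAP values listed in the two tables of this section; the helper name `reduction_percent` is hypothetical.

```python
# Inference time in ms, copied from the two tables in this section.
official_ms = {"cv181x": 75.4, "cv182x": 56.6, "cv183x": 21.85, "cv186x": 7.91}
tdl_sdk_ms = {"cv181x": 70.41, "cv182x": 52.01, "cv183x": 18.95, "cv186x": 6.54}

def reduction_percent(before, after):
    """Relative reduction from before to after, in percent."""
    return (before - after) / before * 100.0

reductions = {p: reduction_percent(official_ms[p], tdl_sdk_ms[p]) for p in official_ms}
for platform, pct in reductions.items():
    print(f"{platform}: {pct:.1f}% lower inference time with the TDL_SDK export")

# mAP 0.5-0.95 difference between the ONNX model and the cv181x cvimodel (TDL_SDK export).
map_drop = 36.438 - 32.6277
print(f"mAP 0.5-0.95 drop on cv181x: {map_drop:.2f} points")
```

On every platform with a measured time, the TDL_SDK export is faster than the official export, and it is the only one of the two for which quantized mAP figures are reported.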