5. Front-end Conversion

This chapter takes the onnx model as an example to introduce the front-end conversion process of models/operators in this project.

5.1. Main Work

The front-end is mainly responsible for transforming the original model into a Top (chip-independent) mlir model. Canonicalization is not applied at this stage, so the generated file is named “*_origin.mlir”. Based on the original model and the arguments passed to model_transform.py, this process creates the corresponding operators (Op) and adds them to the mlir model. The transformed model is saved in an mlir file and its weights in an npz file.

5.2. Workflow

Prerequisite: the Top layer operators are defined in TopOps.td.

Input: the original onnx model and the arguments (mainly preprocessing arguments).

Initialize OnnxConverter (load_onnx_model + init_MLIRImporter).

The load_onnx_model part refines the model, truncates it at the output_names given in the arguments, and extracts the relevant information from the refined model.

The init_MLIRImporter part generates the initial mlir text.

generate_mlir

Create the input op, the ops for the intermediate nodes of the model, and the return op in turn, and add them to the mlir text (if an op has weight tensors, an additional weight op is created for each of them).

Output

Save the simplified model as a “*_opt.onnx” file

Generate a “.prototxt” file to save the model information except the weights

Convert the generated text to str and save it as a “.mlir” file

Save model weights (tensors) in “.npz” file

The workflow of the front-end conversion is shown in the figure (Front-end conversion workflow).

Fig. 5.1 Front-end conversion workflow
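The truncation performed by load_onnx_model can be illustrated with a minimal sketch. It is not the project's implementation: `Node` and `truncate_at_outputs` are hypothetical names, and the graph is a plain Python list standing in for an onnx graph in topological order.

```python
from collections import namedtuple

# Hypothetical stand-in for an onnx node: op type, input names, output names.
Node = namedtuple("Node", ["op_type", "inputs", "outputs"])


def truncate_at_outputs(nodes, output_names):
    """Keep only the nodes needed to compute `output_names`.

    `nodes` is assumed to be in topological order.
    """
    needed = set(output_names)
    kept = []
    # Walk backwards so that every consumer is visited before its producer.
    for node in reversed(nodes):
        if needed.intersection(node.outputs):
            kept.append(node)
            needed.update(node.inputs)
    kept.reverse()
    return kept


nodes = [
    Node("Conv", ["input", "weight", "bias"], ["conv_out"]),
    Node("Relu", ["conv_out"], ["relu_out"]),
    Node("Sigmoid", ["relu_out"], ["output"]),
]

# Requesting an intermediate tensor drops every node after it.
kept = truncate_at_outputs(nodes, ["relu_out"])
print([n.op_type for n in kept])  # ['Conv', 'Relu']
```

Requesting the final tensor "output" instead keeps all three nodes, which matches the case where output_names is not used to cut the model.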

- Additional Notes:

Build input op requires:

input_names.index for each input.

preprocessing arguments (if the input is an image).

Convert nodes op requires:

former ops.

the output_shape from shapes.

attrs extracted from the onnx node. Attrs are set by MLIRImporter according to the definition in TopOps.td.

Build return op requires:

output ops according to output_names.

An insertion is performed for each op conversion or creation. The operator is inserted into the mlir text so that the final generated text corresponds one-to-one with the original onnx model.
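The dependencies listed in these notes can be sketched as a small bookkeeping routine. This is a toy model under stated assumptions: `generate_ops` is a hypothetical function, the op kinds ("Input", "Weight", "Return") are plain labels rather than real mlir syntax, and a list of tuples stands in for the mlir text.

```python
def generate_ops(input_names, nodes, output_names, tensors):
    """Return (kind, name, operand_names, attrs) records in creation order."""
    operands = {}  # tensor name -> kind of the op that produces it
    created = []   # stands in for the mlir text being built up

    def insert(kind, name, ins, attrs=None):
        created.append((kind, name, tuple(ins), attrs or {}))
        operands[name] = kind

    # 1. One input op per model input, tagged with its index in input_names.
    for idx, name in enumerate(input_names):
        insert("Input", name, [], {"index": idx})

    # 2. Node ops in graph order; weight ops are created on demand.
    for op_type, ins, out in nodes:
        for name in ins:
            if name in tensors and name not in operands:
                insert("Weight", name, [])
            if name not in operands:
                raise KeyError(f"operand {name!r} has not been created yet")
        insert(op_type, out, ins)

    # 3. The return op collects the ops named in output_names.
    for name in output_names:
        if name not in operands:
            raise KeyError(f"output {name!r} was never produced")
    created.append(("Return", "return", tuple(output_names), {}))
    return created


ops = generate_ops(
    input_names=["input"],
    nodes=[("Conv", ["input", "weight", "bias"], "output")],
    output_names=["output"],
    tensors={"weight", "bias"},
)
for op in ops:
    print(op)
```

Because every record is appended at the moment its op is created, the order of `created` follows the order of the nodes in the source graph, which is the one-to-one correspondence the notes describe.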

5.3. Example

This section takes the Conv onnx operator as an example for Top mlir conversion. The original model is shown in the figure (Conv onnx model).

Fig. 5.2 Conv onnx model

The conversion process:

Conv op definition

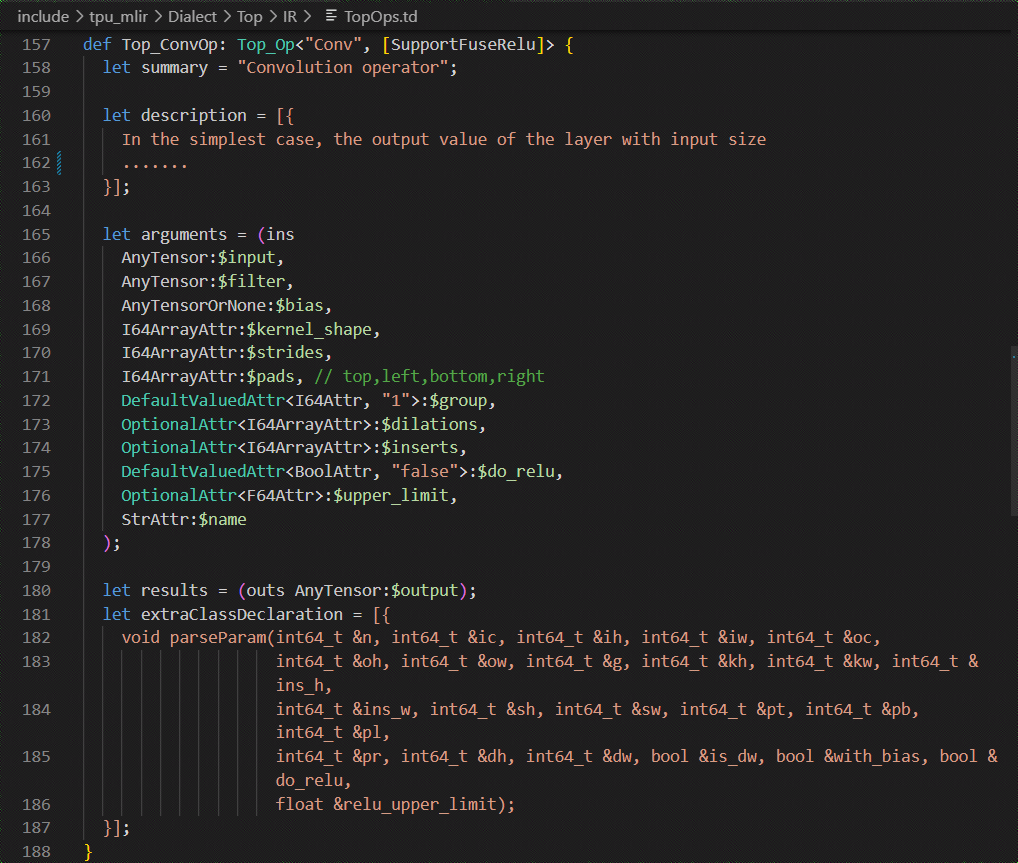

Define the Top.Conv operator in TopOps.td. The definition is shown in the figure (Top.Conv definition).

Fig. 5.3 Top.Conv definition

Initialize OnnxConverter

load_onnx_model:

Since this example uses the simplest model, the resulting Conv_opt.onnx model is the same as the original one.

input_names saves the input name “input” of the Conv op.

The weight and bias of the Conv op are stored in tensors.

shapes saves the input_shape and output_shape of the Conv op.

output_names holds the output name “output” of the Conv op.

init_MLIRImporter:

The initial mlir text MLIRImporter.mlir_module is generated based on the model name and on the input shape and output shape from shapes, as shown in the figure (Initial mlir text).

Fig. 5.4 Initial mlir text

generate_mlir

Build the input op. The generated Top.inputOp is inserted into MLIRImporter.mlir_module.

Call convert_conv_op(), which calls MLIRImporter.create_conv_op to create a ConvOp. The create function takes the following arguments:

inputOp: the figure (Conv onnx model) shows that the inputs of the Conv operator are input, weight and bias. The inputOp has already been created, and the ops for weight and bias are created by getWeightOp().

output_shape: use onnx_node.name to get the output shape of the Conv operator from shapes.

Attributes: get the attributes shown in the figure (Conv onnx model) from the onnx Conv operator.

The attributes of the Top.Conv operator are set according to the definition in (Top.Conv definition). Top.ConvOp will be inserted into the MLIR text after it is created.

Get the output op from operands based on output_names, create the return_op from it, and insert it into the mlir text. At this point, the generated mlir text is as shown in the figure (Complete mlir text).

Fig. 5.5 Complete mlir text
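The Conv conversion step can be sketched as follows. This is an illustration only and not the code of convert_conv_op in the project: the dictionary layout, the helper `conv_attrs`, and the concrete shapes are assumptions made for the example. The default values used when an attribute is absent are those of the onnx Conv operator specification; the `auto_pad` attribute is ignored here for brevity.

```python
def conv_attrs(node_attrs, weight_shape):
    """Collect Conv attributes, filling in the onnx defaults when absent."""
    # The onnx Conv weight layout is (M, C/group, k1, k2, ...).
    spatial_rank = len(weight_shape) - 2
    return {
        "kernel_shape": list(node_attrs.get("kernel_shape", weight_shape[2:])),
        "strides": list(node_attrs.get("strides", [1] * spatial_rank)),
        "dilations": list(node_attrs.get("dilations", [1] * spatial_rank)),
        # pads lists the begin value of every spatial axis, then the end values.
        "pads": list(node_attrs.get("pads", [0] * (2 * spatial_rank))),
        "group": node_attrs.get("group", 1),
    }


def convert_conv_op(node, shapes, tensors, operands):
    """Toy conversion: gather operands, output shape and attributes."""
    ins = []
    for name in node["inputs"]:
        if name in tensors:
            ins.append(("Weight", name))  # stand-in for getWeightOp()
        else:
            ins.append(operands[name])    # an op created earlier
    op = {
        "kind": "Conv",
        "operands": ins,
        "output_shape": shapes[node["name"]],
        "attrs": conv_attrs(node["attrs"], tensors[node["inputs"][1]]),
    }
    operands[node["name"]] = op  # later ops and the return op look it up here
    return op


# Hypothetical values; here `tensors` maps a weight name to its shape only.
tensors = {"weight": (32, 16, 3, 3), "bias": (32,)}
shapes = {"input": [1, 16, 100, 100], "output": [1, 32, 100, 100]}
operands = {"input": ("Input", "input")}
node = {
    "name": "output",
    "inputs": ["input", "weight", "bias"],
    "attrs": {"kernel_shape": [3, 3], "pads": [1, 1, 1, 1]},
}

op = convert_conv_op(node, shapes, tensors, operands)
print(op["attrs"])
```

In this sketch the strides, dilations and group are not given on the node, so they fall back to the defaults, while kernel_shape and pads are taken from the node itself.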

Output

Save the mlir text as Conv_origin.mlir and the weights in tensors as Conv_TOP_F32_all_weight.npz.