3. Compile the ONNX model

This chapter takes yolov5s.onnx as an example to introduce how to compile and transfer an onnx model to run on the BM1684X TPU platform.

The model is from the official website of yolov5: https://github.com/ultralytics/yolov5/releases/download/v6.0/yolov5s.onnx

This chapter requires the following files (where xxxx corresponds to the actual version information):

tpu-mlir_xxxx.tar.gz (The release package of tpu-mlir)

3.1. Load tpu-mlir

The following operations need to be in a Docker container. For the use of Docker, please refer to Setup Docker Container.

1$ tar zxf tpu-mlir_xxxx.tar.gz

2$ source tpu-mlir_xxxx/envsetup.sh

envsetup.sh adds the following environment variables:

Name |

Value |

Explanation |

|---|---|---|

TPUC_ROOT |

tpu-mlir_xxx |

The location of the SDK package after decompression |

MODEL_ZOO_PATH |

${TPUC_ROOT}/../model-zoo |

The location of the model-zoo folder, at the same level as the SDK |

envsetup.sh modifies the environment variables as follows:

1export PATH=${TPUC_ROOT}/bin:$PATH

2export PATH=${TPUC_ROOT}/python/tools:$PATH

3export PATH=${TPUC_ROOT}/python/utils:$PATH

4export PATH=${TPUC_ROOT}/python/test:$PATH

5export PATH=${TPUC_ROOT}/python/samples:$PATH

6export LD_LIBRARY_PATH=$TPUC_ROOT/lib:$LD_LIBRARY_PATH

7export PYTHONPATH=${TPUC_ROOT}/python:$PYTHONPATH

8export MODEL_ZOO_PATH=${TPUC_ROOT}/../model-zoo

9export REGRESSION_PATH=${TPUC_ROOT}/regression

3.2. Prepare working directory

Create a model_yolov5s directory, note that it is the same level directory as tpu-mlir; and put both model files and image files

into the model_yolov5s directory.

The operation is as follows:

1$ mkdir yolov5s_onnx && cd yolov5s_onnx

2$ cp $TPUC_ROOT/regression/model/yolov5s.onnx .

3$ cp -rf $TPUC_ROOT/regression/dataset/COCO2017 .

4$ cp -rf $TPUC_ROOT/regression/image .

5$ mkdir workspace && cd workspace

$TPUC_ROOT is an environment variable, corresponding to the tpu-mlir_xxxx directory.

3.3. ONNX to MLIR

If the input is image, we need to know the preprocessing of the model before transferring it. If the model uses preprocessed npz files as input, no preprocessing needs to be considered. The preprocessing process is formulated as follows ( \(x\) represents the input):

The image of the official yolov5 is rgb. Each value will be multiplied by 1/255, respectively corresponding to

0.0,0.0,0.0 and 0.0039216,0.0039216,0.0039216 when it is converted into mean and scale.

The model conversion command is as follows:

$ model_transform.py \

--model_name yolov5s \

--model_def ../yolov5s.onnx \

--input_shapes [[1,3,640,640]] \

--mean 0.0,0.0,0.0 \

--scale 0.0039216,0.0039216,0.0039216 \

--keep_aspect_ratio \

--pixel_format rgb \

--output_names 350,498,646 \

--test_input ../image/dog.jpg \

--test_result yolov5s_top_outputs.npz \

--mlir yolov5s.mlir

The main parameters of model_transform.py are described as follows (for a complete introduction, please refer to the user interface chapter of the TPU-MLIR Technical Reference Manual):

Name |

Required? |

Explanation |

|---|---|---|

model_name |

Y |

Model name |

model_def |

Y |

Model definition file (e.g., ‘.onnx’, ‘.tflite’ or ‘.prototxt’ files) |

input_shapes |

N |

Shape of the inputs, such as [[1,3,640,640]] (a two-dimensional array), which can support multiple inputs |

input_types |

N |

Type of the inputs, such int32; separate by ‘,’ for multi inputs; float32 as default |

resize_dims |

N |

The size of the original image to be adjusted to. If not specified, it will be resized to the input size of the model |

keep_aspect_ratio |

N |

Whether to maintain the aspect ratio when resize. False by default. It will pad 0 to the insufficient part when setting |

mean |

N |

The mean of each channel of the image. The default is 0.0,0.0,0.0 |

scale |

N |

The scale of each channel of the image. The default is 1.0,1.0,1.0 |

pixel_format |

N |

Image type, can be rgb, bgr, gray or rgbd |

output_names |

N |

The names of the output. Use the output of the model if not specified, otherwise use the specified names as the output |

test_input |

N |

The input file for validation, which can be an image, npy or npz. No validation will be carried out if it is not specified |

test_result |

N |

Output file to save validation result |

excepts |

N |

Names of network layers that need to be excluded from validation. Separated by comma |

mlir |

Y |

The output mlir file name (including path) |

After converting to an mlir file, a ${model_name}_in_f32.npz file will be generated, which is the input file for the subsequent models.

3.4. MLIR to F16 bmodel

To convert the mlir file to the f16 bmodel, we need to run:

$ model_deploy.py \

--mlir yolov5s.mlir \

--quantize F16 \

--chip bm1684x \

--test_input yolov5s_in_f32.npz \

--test_reference yolov5s_top_outputs.npz \

--tolerance 0.99,0.99 \

--model yolov5s_1684x_f16.bmodel

The main parameters of model_deploy.py are as follows (for a complete introduction, please refer to the user interface chapter of the TPU-MLIR Technical Reference Manual):

Name |

Required? |

Explanation |

|---|---|---|

mlir |

Y |

Mlir file |

quantize |

Y |

Quantization type (F32/F16/BF16/INT8) |

chip |

Y |

The platform that the model will use. Support bm1684x/bm1684/cv183x/cv182x/cv181x/cv180x. |

calibration_table |

N |

The calibration table path. Required when it is INT8 quantization |

tolerance |

N |

Tolerance for the minimum similarity between MLIR quantized and MLIR fp32 inference results |

test_input |

N |

The input file for validation, which can be an image, npy or npz. No validation will be carried out if it is not specified |

test_reference |

N |

Reference data for validating mlir tolerance (in npz format). It is the result of each operator |

compare_all |

N |

Compare all tensors, if set. |

excepts |

N |

Names of network layers that need to be excluded from validation. Separated by comma |

model |

Y |

Name of output model file (including path) |

After compilation, a file named yolov5s_1684x_f16.bmodel is generated.

3.5. MLIR to INT8 bmodel

3.5.1. Calibration table generation

Before converting to the INT8 model, you need to run calibration to get the calibration table. The number of input data is about 100 to 1000 according to the situation.

Then use the calibration table to generate a symmetric or asymmetric bmodel. It is generally not recommended to use the asymmetric one if the symmetric one already meets the requirements, because the performance of the asymmetric model will be slightly worse than the symmetric model.

Here is an example of the existing 100 images from COCO2017 to perform calibration:

$ run_calibration.py yolov5s.mlir \

--dataset ../COCO2017 \

--input_num 100 \

-o yolov5s_cali_table

After running the command above, a file named yolov5s_cali_table will be generated, which is used as the input file for subsequent compilation of the INT8 model.

3.5.2. Compile to INT8 symmetric quantized model

Execute the following command to convert to the INT8 symmetric quantized model:

$ model_deploy.py \

--mlir yolov5s.mlir \

--quantize INT8 \

--calibration_table yolov5s_cali_table \

--chip bm1684x \

--test_input yolov5s_in_f32.npz \

--test_reference yolov5s_top_outputs.npz \

--tolerance 0.85,0.45 \

--model yolov5s_1684x_int8_sym.bmodel

After compilation, a file named yolov5s_1684x_int8_sym.bmodel is generated.

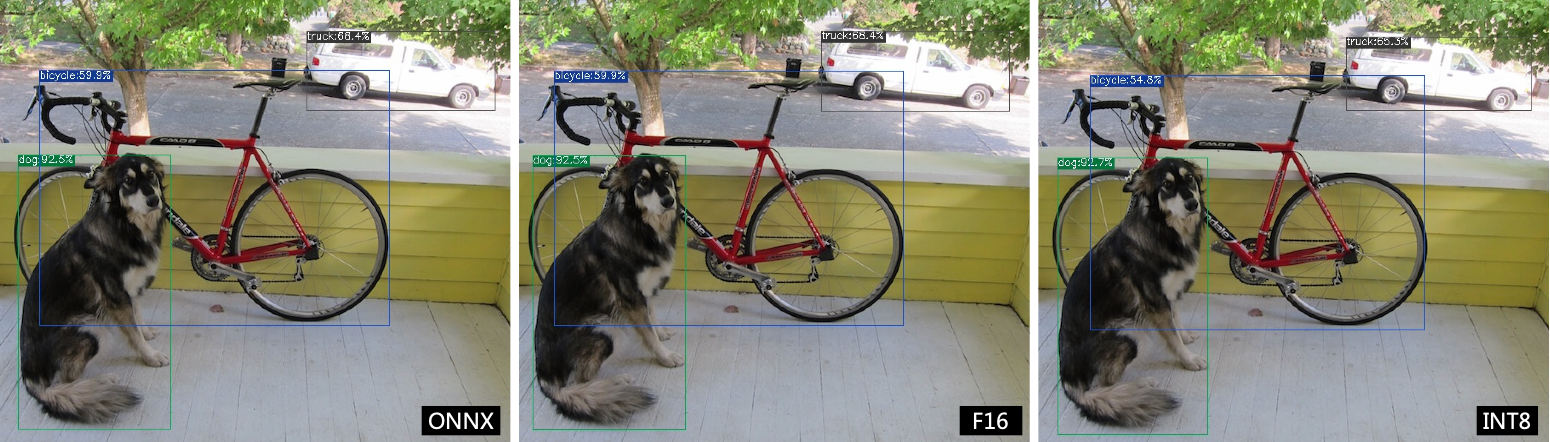

3.6. Effect comparison

There is a yolov5 use case written in python in this release package for object detection on images. The source code path is $TPUC_ROOT/python/samples/detect_yolov5.py. It can be learned how the model is used by reading the code. Firstly, preprocess to get the model’s input, then do inference to get the output, and finally do post-processing.

Use the following codes to validate the inference results of onnx/f16/int8 respectively.

The onnx model is run as follows to get dog_onnx.jpg:

$ detect_yolov5.py \

--input ../image/dog.jpg \

--model ../yolov5s.onnx \

--output dog_onnx.jpg

The f16 bmodel is run as follows to get dog_f16.jpg :

$ detect_yolov5.py \

--input ../image/dog.jpg \

--model yolov5s_1684x_f16.bmodel \

--output dog_f16.jpg

The int8 symmetric bmodel is run as follows to get dog_int8_sym.jpg:

$ detect_yolov5.py \

--input ../image/dog.jpg \

--model yolov5s_1684x_int8_sym.bmodel \

--output dog_int8_sym.jpg

The result images are compared as shown in the figure (Comparison of TPU-MLIR for YOLOv5s’ compilation effect).

Fig. 3.1 Comparison of TPU-MLIR for YOLOv5s’ compilation effect

Due to different operating environments, the final performance will be somewhat different from Fig. 3.1.

3.7. Model performance test

The following operations need to be performed outside of Docker,

3.7.1. Install the libsophon

Please refer to the libsophon manual to install libsophon.

3.7.2. Check the performance of BModel

After installing libsophon, you can use bmrt_test to test the accuracy and performance of the bmodel. You can choose a suitable model by estimating the maximum fps of the model based on the output of bmrt_test.

# Test the bmodel compiled above

# --bmodel parameter followed by bmodel file,

$ cd $TPUC_ROOT/../model_yolov5s/workspace

$ bmrt_test --bmodel yolov5s_1684x_f16.bmodel

$ bmrt_test --bmodel yolov5s_1684x_int8_sym.bmodel

Take the output of the last command as an example (the log is partially truncated here):

1[BMRT][load_bmodel:983] INFO:pre net num: 0, load net num: 1

2[BMRT][show_net_info:1358] INFO: ########################

3[BMRT][show_net_info:1359] INFO: NetName: yolov5s, Index=0

4[BMRT][show_net_info:1361] INFO: ---- stage 0 ----

5[BMRT][show_net_info:1369] INFO: Input 0) 'images' shape=[ 1 3 640 640 ] dtype=FLOAT32

6[BMRT][show_net_info:1378] INFO: Output 0) '350_Transpose_f32' shape=[ 1 3 80 80 85 ] ...

7[BMRT][show_net_info:1378] INFO: Output 1) '498_Transpose_f32' shape=[ 1 3 40 40 85 ] ...

8[BMRT][show_net_info:1378] INFO: Output 2) '646_Transpose_f32' shape=[ 1 3 20 20 85 ] ...

9[BMRT][show_net_info:1381] INFO: ########################

10[BMRT][bmrt_test:770] INFO:==> running network #0, name: yolov5s, loop: 0

11[BMRT][bmrt_test:834] INFO:reading input #0, bytesize=4915200

12[BMRT][print_array:702] INFO: --> input_data: < 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 ...

13[BMRT][bmrt_test:982] INFO:reading output #0, bytesize=6528000

14[BMRT][print_array:702] INFO: --> output ref_data: < 0 0 0 0 0 0 0 0 0 0 0 0 0 0...

15[BMRT][bmrt_test:982] INFO:reading output #1, bytesize=1632000

16[BMRT][print_array:702] INFO: --> output ref_data: < 0 0 0 0 0 0 0 0 0 0 0 0 0 0...

17[BMRT][bmrt_test:982] INFO:reading output #2, bytesize=408000

18[BMRT][print_array:702] INFO: --> output ref_data: < 0 0 0 0 0 0 0 0 0 0 0 0 0 0...

19[BMRT][bmrt_test:1014] INFO:net[yolov5s] stage[0], launch total time is 4122 us (npu 4009 us, cpu 113 us)

20[BMRT][bmrt_test:1017] INFO:+++ The network[yolov5s] stage[0] output_data +++

21[BMRT][print_array:702] INFO:output data #0 shape: [1 3 80 80 85 ] < 0.301003 ...

22[BMRT][print_array:702] INFO:output data #1 shape: [1 3 40 40 85 ] < 0 0.228689 ...

23[BMRT][print_array:702] INFO:output data #2 shape: [1 3 20 20 85 ] < 1.00135 ...

24[BMRT][bmrt_test:1058] INFO:load input time(s): 0.008914

25[BMRT][bmrt_test:1059] INFO:calculate time(s): 0.004132

26[BMRT][bmrt_test:1060] INFO:get output time(s): 0.012603

27[BMRT][bmrt_test:1061] INFO:compare time(s): 0.006514

The following information can be learned from the output above:

Lines 05-08: the input and output information of bmodel

Line 19: running time on the TPU, of which the TPU takes 4009us and the CPU takes 113us. The CPU time here mainly refers to the waiting time of calling at HOST

Line 24: the time to load data into the NPU’s DDR

Line 25: the total time of Line 12

Line 26: the output data retrieval time